Showing posts with label neuroscience.

Saturday, June 19, 2010

David O'Reilly on animating movement

I think the bit on movement trajectories in animation is brilliant. Natural human movements also follow this kind of smooth trajectory; studies of motor planning (e.g. here) suggest that this sort of movement is optimized to minimize energy expenditure. It's so great when artists figure out how to convey different feelings just by making subtle tweaks to the textures and physics of the real world; I think it can tell us a lot about our own perception.

Also: vectorpunk? Seriously, BoingBoing? Vectorpunk.

Labels:

art,

modeling,

neuroscience

Monday, May 17, 2010

Hemispatial neglect

Drawings copied by a patient with allocentric hemispatial neglect, a condition in which damage to attention centers in the frontal or temporal lobes causes the patient to neglect one half (usually the left) of every object.

Labels:

neuroscience

Tuesday, December 15, 2009

Algorithm Design

In scientific computing, users don't always give full consideration to the efficiency of their algorithms: if you want to run a sort or solve some combinatorial problem, you are usually more concerned with making the algorithm work than with how fast it runs. But with very large data sets, reducing the asymptotic running time of your algorithm from O(N^2) to O(N log N) can cut your runtime from something on the order of years to something on the order of seconds. So there are many computational problems for which solutions are known to exist but are so expensive to compute that they are effectively useless; if a new algorithm could be found which reduced their runtime, we might suddenly be able to use them.
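To put rough numbers on "years versus seconds", here is a back-of-the-envelope calculation. It assumes, purely for illustration, a billion data points and a machine doing a billion simple steps per second, and it ignores constant factors entirely.

```python
import math

SECONDS_PER_YEAR = 60 * 60 * 24 * 365


def runtime_seconds(operations, ops_per_second=1e9):
    """Wall-clock time if the machine performs ops_per_second simple steps per second."""
    return operations / ops_per_second


n = 1e9  # a billion data points (illustrative assumption)
quadratic = runtime_seconds(n ** 2)               # an O(N^2) algorithm
linearithmic = runtime_seconds(n * math.log2(n))  # an O(N log N) algorithm

print(f"N^2:     {quadratic / SECONDS_PER_YEAR:.0f} years")  # N^2:     32 years
print(f"N log N: {linearithmic:.0f} seconds")                # N log N: 30 seconds
```

Real constant factors would shift both numbers, but the gap of roughly seven orders of magnitude comes from the growth rates alone.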

The search for faster algorithms is the driving motivation behind much of the work that goes into quantum computing. A quantum bit (qubit) can exist in a superposition of 0 and 1 rather than holding a single binary value, and measuring it gives a probabilistic outcome, so a quantum computer operates in a fundamentally different way, and we can design algorithms which take such differences into account. One vivid example is Grover's algorithm for searching an unsorted array. Here's a good description from Google labs:

Assume I hide a ball in a cabinet with a million drawers. How many drawers do you have to open to find the ball? Sometimes you may get lucky and find the ball in the first few drawers but at other times you have to inspect almost all of them. So on average it will take you 500,000 peeks to find the ball. Now a quantum computer can perform such a search looking only into 1000 drawers.

So if you were opening one drawer a second, the traditional approach would take you nearly six days on average, while the quantum algorithm would take a little under 17 minutes.
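For the curious, here is a minimal sketch of Grover's algorithm simulated on an ordinary computer, which means tracking one amplitude per drawer (so the simulation itself gets no speedup). Each iteration flips the sign of the marked drawer's amplitude (the "oracle") and then reflects every amplitude about the mean (the "diffusion" step). The quote's "1000 drawers" reflects the square-root scaling; the usual iteration count is about (π/4)·√N, which for a million drawers is 785. The function names and the 1,024-drawer test size are my own illustrative choices.

```python
import math


def grover_iterations(n_items):
    """Standard number of Grover iterations: about (pi / 4) * sqrt(N)."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


def grover_success_probability(n_items, marked):
    """Simulate Grover's search and return the chance of measuring `marked`."""
    # Start in a uniform superposition: every drawer equally likely.
    amp = [1 / math.sqrt(n_items)] * n_items
    for _ in range(grover_iterations(n_items)):
        # Oracle: flip the sign of the marked drawer's amplitude.
        amp[marked] = -amp[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]
    return amp[marked] ** 2


n = 1_000_000
print("classical average peeks:", (n + 1) / 2)   # 500000.5
print("Grover iterations:", grover_iterations(n))  # 785
print(f"P(success), 1024 drawers: {grover_success_probability(1024, marked=7):.3f}")
```

The million-drawer case is only counted, not simulated, since a pure-Python simulation would have to update a million amplitudes 785 times.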

(Now, say each of those million drawers represented a different combination of letters and numbers, and you were trying to find the drawer/combination which corresponded to the password to someone's email account. Encryption that would be secure against brute-force attacks from a traditional computer becomes much weaker against quantum algorithms, since a search that takes on the order of N guesses classically takes only on the order of the square root of N quantum steps.)

While quantum computing still has a ways to go, parallel programming already offers another alternative to traditional computer architecture. In parallel programming, you split your code up and send it to a number of computers running simultaneously. (For our million-drawer problem: if you had 9 other people helping you, you could each search a different set of 100,000 drawers, and it would take only 50,000 steps on average to find the ball.) So the trick in parallel programming is to eliminate the bottlenecks in your code and split your task across processors as efficiently as possible.
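Here is a minimal sketch of the ten-searcher version of the drawer problem, assuming each worker gets its own contiguous block of drawers. It uses Python threads only to illustrate the partitioning; because of Python's global interpreter lock, real speedups for CPU-bound work would need processes or an MPI-style setup. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def search_chunk(drawers, start, stop, target):
    """Open drawers start..stop-1 in order; return (index, peeks) or (None, peeks)."""
    peeks = 0
    for i in range(start, stop):
        peeks += 1
        if drawers[i] == target:
            return i, peeks
    return None, peeks


def parallel_search(drawers, target, workers=10):
    """Give each worker its own block of drawers to search.

    Returns (index, steps), where steps is how many drawers the successful
    worker opened: with everyone working side by side, that is the elapsed time.
    """
    n = len(drawers)
    chunk = -(-n // workers)  # ceiling division so every drawer is assigned
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [
            pool.submit(search_chunk, drawers, start, min(start + chunk, n), target)
            for start in range(0, n, chunk)
        ]
        results = [f.result() for f in futures]
    for index, peeks in results:
        if index is not None:
            return index, peeks
    return None, max(peeks for _, peeks in results)


drawers = [0] * 1_000_000
drawers[123_456] = 1  # the ball
print(parallel_search(drawers, target=1))  # (123456, 23457)
```

Here the ball sits 23,457 drawers into the second worker's block. For a random hiding place the successful worker opens about 50,000 drawers on average. Note that the other workers keep scanning their whole blocks; a real implementation would signal them to stop once the ball turns up.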

Now, what about a task like image recognition? If you had a couple thousand processors at your disposal and a single image to feed them, what is the most efficient way for your processors to break up that image so that between them they can reconstruct an understanding of what it depicts? You might decide to give each computer a different small piece of the image and tell it to describe what it sees there, maybe by indicating the presence or absence of certain shapes within that piece. Then have another round of computers look at the output of this first batch and draw more abstract conclusions: say computers 3, 19, and 24 all detected their target shape, so there must be a curve shaped like such-and-such. Continue upwards with more and more tiers representing higher and higher levels of abstraction, until you reach some level which effectively "knows" what is in the picture. This matches our current understanding of the visual cortex: cells with different receptive fields, tuned to different stimulus orientations and movements, all process the incoming scene in parallel while communicating with higher-level regions of the brain.
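The tiered scheme above can be sketched with a toy two-level hierarchy; every name here is hypothetical. Tier 1 tiles a tiny binary image into patches and, in parallel, asks each patch whether it contains a vertical or horizontal intensity change, a crude stand-in for orientation-tuned receptive fields. Tier 2 combines those reports into a more abstract conclusion: "a vertical contour runs the full height of the image in this column of patches." Edges that fall exactly on a patch boundary are missed, one of several simplifications.

```python
from concurrent.futures import ThreadPoolExecutor


def tile(image, size):
    """Split a 2-D list into size x size patches keyed by (patch_row, patch_col)."""
    return {
        (r // size, c // size): [row[c:c + size] for row in image[r:r + size]]
        for r in range(0, len(image), size)
        for c in range(0, len(image[0]), size)
    }


def detect_edges(patch):
    """Tier 1: a crude oriented 'receptive field' applied to one patch.

    A vertical edge is a change between horizontally adjacent pixels;
    a horizontal edge is a change between vertically adjacent pixels.
    """
    vertical = any(row[i] != row[i + 1] for row in patch for i in range(len(row) - 1))
    horizontal = any(
        patch[j][i] != patch[j + 1][i]
        for j in range(len(patch) - 1)
        for i in range(len(patch[0]))
    )
    return {"vertical": vertical, "horizontal": horizontal}


def find_vertical_contours(image, size=2):
    """Tier 2: patch columns in which every patch reported a vertical edge."""
    tiles = tile(image, size)
    with ThreadPoolExecutor() as pool:
        # Patches are analyzed independently, so tier 1 runs in parallel.
        features = dict(zip(tiles.keys(), pool.map(detect_edges, tiles.values())))
    n_rows = 1 + max(r for r, _ in features)
    n_cols = 1 + max(c for _, c in features)
    return [
        c for c in range(n_cols)
        if all(features[(r, c)]["vertical"] for r in range(n_rows))
    ]


image = [[0, 1, 0, 0] for _ in range(4)]  # a one-pixel-wide vertical line
print(find_vertical_contours(image))  # [0]
```

A real system would stack many more tiers and learn its detectors rather than hard-coding them, but the division of labor is the same: many small local analyses in parallel, then fewer, more abstract ones on top.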

It would be interesting, then, to see what sensory-processing neuroscience and parallel programming could lend one another. Could the architecture of the visual cortex be used to guide design of a parallel architecture for image recognition? Assuming regions like the visual cortex have been evolutionarily optimized, an examination of the parallel architecture of the visual processing system could tell us a lot about how to best organize information flow in parallel computers, and how to format the information which passes between them. Or in the other direction, could design of image-recognition algorithms for massively parallel computers guide experimental analysis of the visual cortex? If we tried to solve for the optimal massively-parallel system for image processing, what computational tasks would the subunits perform, and what would their hierarchy look like-- and could we then look for these computational tasks and overarching structure in the less-understood higher regions of the visual processing stream? It's a bit of a mess because the problem of image processing isn't solved from either end, but that just means each field could benefit from and help guide the efforts of the other.

So! Brains are awesome, and Google should hire neuroscientists. Further reading:

NIPS: Neural Information Processing Systems Foundation

Cosyne: Computational and Systems Neuroscience conference

Introduction to High-Performance Scientific Computing (textbook download)

Message-Passing Interface Standards for Parallel Machines

Google on Machine Learning with Quantum Algorithms

Quantum Adiabatic Algorithms employed by Google

Optimal Coding of Sound

Labels:

computing,

neuroscience,

pattern recognition,

programming,

research,

technology

Sunday, October 11, 2009

Steven Strogatz's Sync

Steven Strogatz's wonderful book Sync discusses how synchrony in biological networks is not only common but nigh-inevitable. His opening discussion of fireflies is particularly vivid: along some riverbanks in Southeast Asia, populations of fireflies stretching for miles all flash in synchrony, a phenomenon which baffled Western explorers for decades. It turns out the effect is easy to replicate in a model. Say you have a collection of periodic oscillators, each of which fires a burst of light at the peak of its cycle and then resets: you can achieve synchrony by making each oscillator, when it fires, bump its neighbors forward a bit in their cycles. An oscillator bumped all the way to its peak fires along with the one that bumped it, and because firing forces a reset, the two are now in step and stay locked together. This effect takes off in small groups and quickly grows until the entire network is firing together.
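Here is a minimal sketch of that firefly model, loosely following the pulse-coupled oscillator model that Mirollo and Strogatz analyzed. Each oscillator's "charge" rises along a concave-down curve as its phase advances; it fires at charge 1 and resets, and each firing adds a fixed kick to everyone else's charge. The curve shape B, the kick size EPS, and the rule that every oscillator swept up in a chain reaction also kicks the others are illustrative assumptions, not values from the book. Mirollo and Strogatz showed that with a concave-down curve and all-to-all coupling, almost every starting configuration ends up synchronized.

```python
import math
import random

B = 3.0    # concavity of the charging curve (hypothetical value)
EPS = 0.1  # kick each firing gives every other oscillator (hypothetical value)
C = math.exp(B) - 1


def state(phase):
    """Concave-down 'charge' of an oscillator as a function of its phase in [0, 1]."""
    return math.log(1 + C * phase) / B


def phase_of(x):
    """Inverse of state(): the phase at which the charge equals x."""
    return (math.exp(B * x) - 1) / C


def cycles_to_sync(phases, max_events=10_000):
    """Run the oscillators until they all fire as one group.

    Returns the number of firing events that took, or None if they never
    synchronized within max_events.
    """
    phases = list(phases)
    for event in range(1, max_events + 1):
        # Jump ahead to the moment the leading oscillator reaches threshold.
        step = 1 - max(phases)
        phases = [p + step for p in phases]
        fired = {i for i, p in enumerate(phases) if p >= 1 - 1e-9}
        frontier = set(fired)
        # Chain reaction: each new firing kicks everyone who hasn't fired yet,
        # and anyone pushed to threshold fires too (is "absorbed").
        while frontier:
            kick = EPS * len(frontier)
            frontier = set()
            for i, p in enumerate(phases):
                if i in fired:
                    continue
                x = state(p) + kick
                if x >= 1:
                    fired.add(i)
                    frontier.add(i)
                else:
                    phases[i] = phase_of(x)
        if len(fired) == len(phases):
            return event
        for i in fired:
            phases[i] = 0.0  # firing resets the cycle
    return None


print(cycles_to_sync([0.0, 0.9]))  # 2
random.seed(1)
print(cycles_to_sync([random.random() for _ in range(10)]))
```

Once two oscillators fire together they share a phase and receive identical kicks from then on, so groups only ever merge; that one-way ratchet is why synchrony spreads from small clusters to the whole population.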

The important points here being that a) neurons do this too (in fact it can be hard to get spiking neural networks to stop doing this) and it's really probably important to coding somehow, and b) you guys, fireflies are totally attempting to form some sort of massive insect-based consciousness here.

You can read more on the subject in the preview of the first chapter and a half, posted on Google Books.

Monday, September 21, 2009

Computational Neuroscience Links

Computational Neuroscience on the World Wide Web-- a pretty comprehensive resource on comp neuro labs, tools, and conferences.

Labels:

compbio,

neuroscience

Friday, July 3, 2009

23 Questions

DARPA's 23 mathematical challenges for modern science, with a distinct emphasis on computational biology.

Larry Abbott's 23 questions in computational neuroscience.

23 is a reference to the father of all such lists: Hilbert's 23 problems, presented at the International Congress of Mathematicians in 1900 (ten in his address, the full list in the published version). These problems shaped much of 20th-century mathematics, and some remain unresolved to this day.

Labels:

compbio,

computing,

mad science,

math,

neuroscience,

research

Tuesday, April 21, 2009

The Wason Selection Task

The Wason Selection Task, a fun little test of logical reasoning commonly used in experimental psychology.

Labels:

neuroscience

Saturday, April 18, 2009

Sound to Pixels

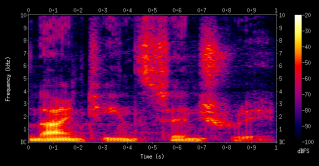

Found a nifty article about a piece of digital music software called Photosounder, posted on a blog called Create Digital Music. Photosounder is an image-sound editor: music is created visually, by drawing and editing the sound's spectrogram. The videos in the CDM article show some of the ways people are using the software; it's pretty impressive stuff. I also found this plugin for Winamp which displays a simple spectrogram of your music as a visualization, if you're just curious to see what the music you're listening to looks like.

The spectrogram is actually a good representation of how sound is coded in the brain: the cochlea in your ear breaks sound input down into narrow frequency bands, just like the frequency axis of the spectrogram, and cells in each frequency band fire at rates that increase with the intensity of sound at that frequency. (So you have a physical structure in your ear which performs something like a Fourier transform; how cool is that?) As seen in this video, a single sound object usually consists of several harmonics, and a full spectrogram can be quite complex, and yet our brain can easily segment that spectrogram to identify different instruments, even when there's a lot of frequency overlap. We can even focus our attention on one specific instrument, which means selectively responding to one particular batch of signals as they move up and down across frequency channels/cell populations. Brains are pretty awesome, guys.
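To see how a spectrogram splits a sound into frequency bands, here is a minimal sketch that chops a signal into frames and takes a naive discrete Fourier transform of each. The sample rate, frame size, and the 256 Hz "note" with two weaker harmonics are made-up illustrative values. The frames use no window function so that these exactly periodic tones land cleanly in single bins; real spectrogram tools usually apply one. A real cochlea behaves more like a bank of overlapping band-pass filters spaced roughly logarithmically, so treat this as an analogy rather than a model of the ear.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitude of each frequency bin 0..N/2 of one frame, via a naive DFT."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size):
    """One spectrum per consecutive, non-overlapping frame (columns = time)."""
    return [
        dft_magnitudes(signal[start:start + frame_size])
        for start in range(0, len(signal) - frame_size + 1, frame_size)
    ]


RATE = 8192  # samples per second (illustrative)
FRAME = 256  # samples per frame, so each bin is RATE / FRAME = 32 Hz wide
# A "note" with a 256 Hz fundamental plus two weaker harmonics.
partials = [(256, 1.0), (512, 0.5), (768, 0.25)]
signal = [
    sum(amp * math.sin(2 * math.pi * f * t / RATE) for f, amp in partials)
    for t in range(4 * FRAME)
]

spec = spectrogram(signal, FRAME)
active = [k * RATE // FRAME for k, m in enumerate(spec[0]) if m > 1.0]
print(active)  # [256, 512, 768]
```

Each harmonic shows up as energy in its own frequency band, which is the picture the cochlea's frequency-tuned cell populations are thought to send upstream.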

(And on another note: as you can see in the aforelinked video, one of the easiest ways to pick out one instrument from a spectrogram is to look for elements which "move together" in time/across the spectrum-- this notion drives a lot of work in both auditory processing and the corresponding problem of object recognition in computer vision.)
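One crude version of the "move together" heuristic is grouping by common onset: frequency bins that switch on in the same frame are assumed to belong to the same source. The sketch below takes a hypothetical thresholded spectrogram (a set of active bins per frame) and groups bins by the frame in which they first appear. It ignores partials that glide in pitch, which a fuller grouping would have to track over time.

```python
from collections import defaultdict


def group_by_onset(active_bins_per_frame):
    """Group frequency bins that switch on in the same frame.

    active_bins_per_frame: a list of sets, one per time frame, holding the
    bins whose energy is above some threshold in that frame.
    Returns {onset_frame: sorted list of bins that first appeared then}.
    """
    onset = {}
    for frame, bins in enumerate(active_bins_per_frame):
        for b in bins:
            onset.setdefault(b, frame)  # keep only the first frame a bin appears
    groups = defaultdict(list)
    for b, frame in onset.items():
        groups[frame].append(b)
    return {frame: sorted(bins) for frame, bins in sorted(groups.items())}


# Instrument A (bins 8, 16, 24) plays throughout; instrument B (bins 10, 20, 30)
# enters at frame 2, overlapping A in time.
frames = [
    {8, 16, 24},
    {8, 16, 24},
    {8, 10, 16, 20, 24, 30},
    {8, 10, 16, 20, 24, 30},
]
print(group_by_onset(frames))  # {0: [8, 16, 24], 2: [10, 20, 30]}
```

Even though both instruments are active in frames 2 and 3, the shared start time is enough to pull their harmonics apart in this toy case.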

Labels:

blogs,

computing,

music,

neuroscience,

sync

Monday, April 13, 2009

Supernumerary Phantom Limbs

People with amputated limbs sometimes experience the presence of a phantom limb which continues to exist where their old limb was: they can perceive pain or temperature changes in the missing limb, and have a sense of its orientation. This is probably caused by lingering activity in the parts of the brain which previously processed sensory data coming from the limb.

Well, it turns out that it's also possible to experience a supernumerary phantom limb: for instance, one Swiss woman reported feeling the presence of a pale, translucent third arm following a stroke. Researchers have found that her brain treats the arm just as it would a real one: when she uses it to perform a task like scratching an itch, fMRI scans of her brain show activity in regions corresponding to her sense of touch, as well as activity in visual areas, consistent with the fact that she sees the arm. And scratching with it actually relieves the itch, too.

Labels:

mad science,

neuroscience,

research

Sunday, October 19, 2008

Abandoned Russian Lab

Abandoned Russian Army Lab-- cool more for artistic merit than anything else; a bunch of photos taken inside an old (and hastily abandoned) Russian Army neuroscience lab. Brains in jars!

Labels:

art,

mad science,

neuroscience
